Vector Synthesis

An investigation into sound-modulated light

Performing with Voltage and Vectors

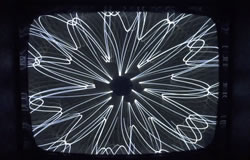

The Vector Synthesis project is an audiovisual, computational art project that uses sound synthesis and vector graphics display techniques to investigate the direct relationship between sound and image.[1] It can be presented as a live performance or as a generative installation. Driven by the waveforms of an analogue synthesizer, the vertical and horizontal movements of a single beam of light trace shapes, points and curves with virtually unlimited resolution, opening a hypnotic window into the process by which the performed sound is created.

[1] The term “vector synthesis” refers here to the synthesis of analogue vector graphics and accompanying audio, and should not be confused with the waveform-mixing sound synthesis technique introduced by Sequential Circuits in 1986.

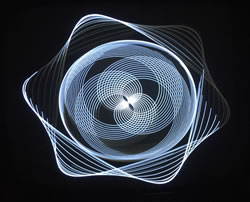

Technically, the work began with the well-known principle of Lissajous figures, which are mathematical representations of complex harmonic motion. Originally produced by reflecting light off mirrors attached to a pair of vibrating tuning forks, they are most familiar today from the screen of an oscilloscope, where they can be generated by a pair of electronic oscillators tuned to specific frequency ratios, one driving the horizontal deflection and the other the vertical. A 1:1 ratio can produce a line, circle, ellipse, rectangle or square, depending on the waveform and relative phase of the oscillators. A 1:2 ratio produces a figure with two lobes, a 1:3 ratio a figure with three lobes, and so on. By sending the same signals to a loudspeaker, so that one can see and hear them at once, one can draw a direct correlation between the visual shapes of the figures and the musical harmonics. Interestingly, Lissajous figures are also used in high-end audio equipment to monitor the phase relationship between the two channels of a stereo signal, an important step in mastering for vinyl record production.
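The relationship between frequency ratio, phase and shape can be sketched in a few lines of Python. This is a minimal illustration, assuming ideal sine-wave oscillators and a deflection range normalized to -1 to 1; the `lissajous` helper is hypothetical and not part of any particular library. Each returned pair would be the left (horizontal) and right (vertical) sample of a stereo signal.

```python
import math

def lissajous(ratio_x, ratio_y, phase, n_points=1000):
    """Return (x, y) sample pairs for one full cycle of a Lissajous figure.

    ratio_x, ratio_y: relative frequencies of the two oscillators (integers)
    phase: phase offset of the horizontal oscillator, in radians
    """
    points = []
    for i in range(n_points):
        t = 2 * math.pi * i / n_points
        x = math.sin(ratio_x * t + phase)  # horizontal deflection, -1..1
        y = math.sin(ratio_y * t)          # vertical deflection, -1..1
        points.append((x, y))
    return points
```

With `lissajous(1, 1, math.pi / 2)` every point lies on a circle of radius 1, `lissajous(1, 1, 0.0)` collapses to a diagonal line, and `lissajous(1, 2, 0.0)` traces a two-lobed figure.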

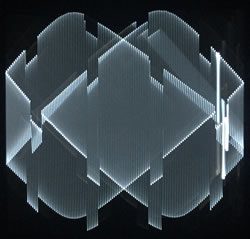

The most important piece of equipment in this setup is the cathode ray tube (CRT). Our relationship to the venerable CRT depends very much on our age. Many of us grew up with one constantly around in the form of a television set, while the younger generation lives in an era in which these objects are rapidly realizing their manifest destiny as e-waste. Unlike modern flat screens, CRTs draw their image with a beam of electrons fired from an electron gun at the back of the tube onto a phosphor coating on the inside of the screen, which glows wherever the beam strikes it. This gives them their unique visual character.

Conventional video images are “raster scanned”: they are quantized to a grid of horizontal scan lines traced by this beam, so that the image you see is drawn one line at a time, top to bottom, by a left-to-right sweep combined with variations in the intensity of the beam. In contrast, the beam of an oscilloscope or vector monitor (such as the ones I use) can move freely and at tremendous speed horizontally or vertically, responding directly to the voltages sent to control it, and its resolution is not constrained to a fixed grid.

I use two different vector monitors in this process: a Panasonic VP 3830, designed for technical measurements in the laboratory, and a Vectrex video game console from approximately 1983, which I have modified following the instructions of Andrew Duff (2014). Each has three main inputs for controlling its beam: the horizontal axis, the vertical axis and the z-axis (the brightness of the beam).
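Because the z-axis is a separate brightness input, the beam can be switched off while it jumps between parts of an image. The Python sketch below builds (x, y, z) sample triples for a set of disconnected line segments, assuming a normalized brightness where 1.0 is fully lit and 0.0 is blanked (the actual polarity and voltage range depend on the monitor); `draw_segments` and its parameters are hypothetical.

```python
def draw_segments(segments, samples_per_segment=8, samples_per_jump=2):
    """Build (x, y, z) samples that draw separate line segments.

    segments: list of ((x0, y0), (x1, y1)) pairs in normalized screen units
    z is the brightness input: 1.0 while a segment is drawn, 0.0 (blanked)
    while the beam moves to the start of the next segment, so no trail is seen.
    """
    out = []
    for (x0, y0), (x1, y1) in segments:
        # Blanked samples give the beam time to reach the new start point unseen
        out.extend([(x0, y0, 0.0)] * samples_per_jump)
        # Lit samples sweep from start to end, including both endpoints
        for k in range(samples_per_segment + 1):
            a = k / samples_per_segment
            out.append((x0 + a * (x1 - x0), y0 + a * (y1 - y0), 1.0))
    return out
```

For example, `draw_segments([((-1.0, 0.0), (0.0, 0.0)), ((0.0, 0.5), (1.0, 0.5))])` would draw two offset horizontal strokes without the connecting line that would otherwise appear between them.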

My first entirely analogue explorations of these monitors involved a self-made DC (direct current) voltage mixer that allowed me to combine up to four analogue signals for each of the monitor’s inputs, control the individual and overall levels of those signals, and add an offset voltage where necessary to move the entire image up and down or left and right. With various voltage-controlled oscillators, I was able to create basic Lissajous shapes from different waveforms, modulate the frequencies and amplitudes of those waves, and, in a fairly haphazard way, derive more complicated shapes by combining the waveforms sent to each axis and to the brightness control.
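An idealized model of one channel of such a mixer is simply a weighted sum plus an offset. The Python sketch below assumes perfectly linear, non-inverting mixing (a real op-amp circuit may invert the signal or clip at its supply rails); `dc_mix` is a hypothetical name used only for illustration.

```python
def dc_mix(signals, gains, master=1.0, offset=0.0):
    """Model one output channel of a DC-coupled voltage mixer at one instant.

    signals: up to four input voltages
    gains:   one level per input (0.0 = off, 1.0 = unity)
    master:  overall level applied to the sum
    offset:  constant voltage added to shift the whole image
    """
    if len(signals) > 4 or len(signals) != len(gains):
        raise ValueError("expected up to four signals, each with its own gain")
    mixed = sum(g * s for g, s in zip(gains, signals))
    return master * mixed + offset
```

Applied sample by sample to two oscillator signals, for instance `dc_mix([osc_a[n], osc_b[n]], [0.7, 0.3], offset=0.2)`, it would blend them and shift the image by 0.2 units along that axis.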

I quickly realized, however, that to obtain more precise, controllable and reproducible results, I would need to create the figures with digital signals. To this end, I created the Vector Synthesis Library, a digital signal processing toolkit for the Pure Data programming environment. It allows the creation and manipulation of 2D and 3D vector shapes, Lissajous figures, and scan-processed image and video inputs, all as audio signals sent directly to oscilloscopes, hacked CRT monitors, Vectrex game consoles, ILDA laser displays or oscilloscope emulation software.
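The Vector Synthesis Library itself is a set of Pure Data patches; what follows is not its code, only a minimal Python sketch of the underlying idea, assuming normalized deflection values. A closed shape is drawn by moving the beam from vertex to vertex, which means ramping the left (x) and right (y) channels between the corner voltages. `trace_polygon` is a hypothetical helper.

```python
def trace_polygon(vertices, samples_per_edge):
    """Return (x, y) samples that move the beam around a closed polygon.

    Each edge is drawn by linear interpolation from one vertex to the next,
    so both audio channels ramp smoothly between the corner voltages.
    """
    path = []
    n = len(vertices)
    for i in range(n):
        x0, y0 = vertices[i]
        x1, y1 = vertices[(i + 1) % n]  # wrap around to close the shape
        for k in range(samples_per_edge):
            a = k / samples_per_edge    # 0 <= a < 1 along this edge
            path.append((x0 + a * (x1 - x0), y0 + a * (y1 - y0)))
    return path

# A square centred on the screen, half the full deflection range wide
square = [(-0.5, -0.5), (0.5, -0.5), (0.5, 0.5), (-0.5, 0.5)]
path = trace_polygon(square, samples_per_edge=4)
```

Looped continuously at an assumed 48 kHz sample rate, this 16-sample path would redraw the square 3,000 times per second, which is also the 3 kHz pitch heard when the same signal drives a loudspeaker: the refresh rate of the image and the pitch of the sound are one and the same.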

Rather than using the popular, ready-made algorithms of applications like Processing and openFrameworks, I coded my own 2D and 3D visualization systems with audio waveforms, and doing so put me in touch with the earliest roots of computer graphics. Instead of the experimental approach of the analogue oscillators, here I had to engage with actual mathematics. Soon the shelves in my studio were filled with out-of-print technical textbooks from the 1960s and 1970s describing matrices, projections, Bézier curves and splines, all of which are quite daunting for someone who failed just about every math class they were ever forced to take! Even more fascinating were the accounts of early pioneers of the medium such as John Whitney Sr., who described the rendering, photographing and film-processing time required for a single 20-second sequence as “agony”, or of his brother James, who was so traumatized by the experience of making Lapis (1966) with a modified WWII anti-aircraft gun computer that he never made another computer-generated film in his life.
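To give a flavour of the textbook mathematics involved, here is a minimal Python sketch of two of its staples: rotating a 3D point around the vertical axis and projecting it onto the 2D screen with a simple pinhole perspective. The function names and the `viewer_distance` and `focal_length` values are assumptions chosen for illustration, not values taken from the Vector Synthesis Library.

```python
import math

def rotate_y(point, angle):
    """Rotate a 3D point (x, y, z) around the vertical y axis by angle radians."""
    x, y, z = point
    c, s = math.cos(angle), math.sin(angle)
    return (c * x + s * z, y, -s * x + c * z)

def project(point, viewer_distance=3.0, focal_length=2.0):
    """Perspective-project a 3D point onto the 2D screen plane.

    The viewer sits viewer_distance units in front of the origin; points with
    larger z are further away and are drawn closer to the centre of the screen.
    """
    x, y, z = point
    scale = focal_length / (viewer_distance + z)
    return (x * scale, y * scale)

# The eight corners of a cube, turned by 0.3 rad and flattened to x/y voltages
cube = [(x, y, z) for x in (-1, 1) for y in (-1, 1) for z in (-1, 1)]
screen = [project(rotate_y(p, 0.3)) for p in cube]
```

Drawing lines between the appropriate projected corners, and slowly increasing the angle over time, would produce a rotating wireframe cube.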

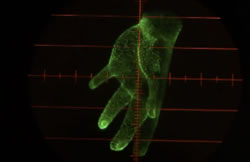

The scan processing section (developed in parallel with a Max/MSP implementation by Ivan Marušić Klif) is particularly exciting. It is based on the same process as the Rutt-Etra video synthesizer of the 1970s, which allowed direct manipulation of the video raster: each horizontal scan line of the image is redrawn as a vector trace and deflected vertically according to the brightness of the picture along it. One could think of it as using sound to display low-resolution video, and I have explored it with live webcam feeds as well as stored pictures and film clips. Along with some of the 3D model rendering functions, it brings a deliberately figurative element to the abstract, geometric space of the vector graphics.
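The core of the scan-processing idea can be sketched as follows, assuming a grayscale image given as rows of brightness values between 0.0 and 1.0. This is a simplified Python illustration of the Rutt-Etra principle, not the code of either the Pure Data or the Max/MSP implementation; `scan_process` and its `displacement` parameter are hypothetical.

```python
def scan_process(image, displacement=0.2):
    """Turn a grayscale image into horizontal vector scan lines.

    image: list of rows, each a list of brightness values from 0.0 to 1.0
    displacement: how far (in screen units) full brightness lifts a line
    Returns one list of (x, y) points per row. Base positions span -1..1;
    bright pixels push the line upwards from its base height.
    """
    rows, cols = len(image), len(image[0])
    lines = []
    for r, row in enumerate(image):
        # Base height of this scan line, from the top (1.0) to the bottom (-1.0)
        base_y = 1.0 - 2.0 * r / (rows - 1) if rows > 1 else 0.0
        line = []
        for c, brightness in enumerate(row):
            x = -1.0 + 2.0 * c / (cols - 1) if cols > 1 else 0.0
            line.append((x, base_y + displacement * brightness))
        lines.append(line)
    return lines
```

Playing the lines back one after another, left to right and top to bottom, would turn the picture into a stereo audio signal; a larger `displacement` exaggerates the relief effect typical of Rutt-Etra imagery.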

The main source of the signals I use to alter the shapes of the images is the Benjolin, a standalone analogue synthesizer designed by Rob Hordijk from the Netherlands (Fig. 4). It contains two voltage-controlled oscillators, a voltage-controlled filter and a circuit called a Rungler, which enables chaotic cross-modulation between the different parts of the circuit. Hordijk refers to the Benjolin as a circuit that has been “bent by design.” I have been working with this instrument for several years now, both performing with it regularly and building expanded and customized versions of it for myself and other artists.
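The Rungler is commonly described as a shift register: at each clock pulse from one oscillator, the current state of the other oscillator is shifted in, and the last three stages are read by a simple digital-to-analogue converter as one of eight stepped voltages. The Python sketch below models only that stepping behaviour; the 8-stage length and the bit weighting are assumptions, and it omits the feedback paths through which the real circuit modulates the oscillators and becomes chaotic. `rungler` is a hypothetical name.

```python
def rungler(data_bits, stages=8):
    """Simplified Rungler-style stepped voltage source (illustrative only).

    data_bits: sequence of 0/1 values, i.e. the state of one oscillator
               sampled at each clock pulse of the other oscillator
    Returns one 3-bit value (0..7) per clock pulse, read from the last
    three stages of the shift register.
    """
    register = [0] * stages
    outputs = []
    for bit in data_bits:
        register = [bit] + register[:-1]      # shift every stage along by one
        b2, b1, b0 = register[-3:]            # last three stages
        outputs.append(4 * b2 + 2 * b1 + b0)  # 3-bit "DAC": eight levels
    return outputs
```

In the actual instrument these stepped values are fed back into the oscillators, so the pattern being sampled changes the very clock and data that produce it; that loop is what this sketch leaves out.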

My live performances and studio setups so far have involved two of these Benjolin circuits, a laptop running Pure Data, a DC-coupled soundcard (a MOTU UltraLite Hybrid) and the vector monitor. DC coupling matters here: unlike an ordinary AC-coupled audio output, it passes static offsets and very slow movements through to the monitor, so the image can be positioned and held still. Once I have created the core shape with patches from the Vector Synthesis Library, I can modulate it with the more intuitive and immediate interface of the analogue synthesizers. The combined signals of the digital vector shapes and the Benjolins are also heard through the loudspeaker. In this way, a very direct relationship between image and sound is preserved.

Digital recording and projection of the CRT vector graphics have so far been the most frustrating element, as it has been impossible to reproduce perfectly the depth, movement and detail I see on the screen with any camera available to me. In the studio, I employ a DSLR camera with a large-aperture lens for what is often called “rescanning” the vector images; for live performances, I follow the adage that the best camera for the job is the one you have with you, and connect my iPhone camera directly to the venue’s projector. The irony is not lost on me: my “primitive” visual system requires a fast modern computer and a high-resolution sound card, and some of my favourite vector images were displayed on a 1950s tube oscilloscope that weighs perhaps 20 kg and produces enough heat to toast bread, yet I present my work to the public using the smallest, most utopian electronic device of the moment.

One could sum up the main benefits of working with analogue vector graphics for abstract audiovisual synthesis as follows:

- Analogue vectors are generated by a fairly low-bandwidth signal in the audio frequency range, which can be produced by very common sound hardware and software (as opposed to video signals, which require frequencies from 15.625 kHz to 6.75 MHz to produce detailed results). This removes the need to invest in upgraded equipment, an important factor for novices and for workshop situations.

- As opposed to conventional raster graphics, analogue vectors have a nearly infinite graphic resolution, constrained only by the size and sharpness of the beam in relation to the total monitor area. (Signal bandwidth does come into play, however: the sample rate and output stage of the sound card limit how much detail can be drawn in each pass of the beam.)

- The control systems for vector synthesis are highly intuitive and the results immediately tangible.

- Vector synthesis maintains a direct, non-symbolic relationship between sound and image due to the fact that both are derived from the same signal.
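The bandwidth points above can be made concrete with a little arithmetic. In this Python sketch, each audio sample is one beam position, so the number of points available for a single image is the sample rate divided by the number of times per second the image is redrawn, while the Nyquist limit (half the sample rate) caps how fast the beam can oscillate. The 50 redraws per second used below is an assumed flicker-free rate, not a value from the text, and `vector_budget` is a hypothetical helper.

```python
def vector_budget(sample_rate_hz, redraws_per_second):
    """Rough drawing budget for an audio-rate vector display.

    Returns (points available per redraw, highest representable frequency in Hz).
    """
    points_per_redraw = sample_rate_hz // redraws_per_second
    nyquist_hz = sample_rate_hz / 2
    return points_per_redraw, nyquist_hz

for rate in (48_000, 96_000, 192_000):
    points, nyquist = vector_budget(rate, 50)
    print(f"{rate} Hz: {points} points per image, detail up to {nyquist:.0f} Hz")
```

Even at 192 kHz, the Nyquist limit of 96 kHz sits far below the 6.75 MHz upper figure quoted for video, which is why common audio hardware is enough for vector images but not for detailed raster video.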

I would like to point out explicitly that there is nothing new about this process, although I do not necessarily consider that a problem. Think of how many novel controllers, interfaces and instruments are abandoned precisely at the moment when their newness wears off, long before any sort of virtuosity can manifest itself.[2] Artists and scientists were exploring Lissajous patterns and other mathematically derived patterns (take the work of Ernst Chladni, for example) long before there were analogue computers, voltage-controlled synthesizers, cathode ray tubes or lasers. Once all these elements were in place, a wealth of such experiments followed from the 1950s onward by major figures such as Mary Ellen Bute, John Whitney, Larry Cuba, Manfred Mohr, Nam June Paik, Ben Laposky, and Steina and Woody Vasulka, for the curious eye and ear to explore.

[2] Michel Waisvisz’s “The Hands” is a classic counterexample: an instrument whose technological development was, from a certain point on, consciously minimized in order to maximize the development of a performing language with it.

However, I also strongly believe that techniques and technologies take on a radically different context once they move from the realm of the cutting edge or contemporary to the status of abandoned, outdated or obsolete. In the past decade, the cathode ray tube has reached the status of what Garnet Hertz and Jussi Parikka call “zombie media”: a commercially buried format resurrected by wilful misuse and creative experimentation. The current vogue for analogue sound and video synthesis has produced a new generation of artists working with Lissajous patterns, vector graphics and Oscilloscope Music, and I count among my peers in these adventures Robin Fox, James Connolly and Kyle Evans (as Cracked Ray Tube), Jerobeam Fenderson, Benton C. Bainbridge and a whole community of intrepid synthesists on the Video Circuits forum.

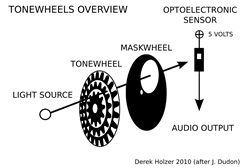

Utopias and Dystopias in Media Archaeology

Vector Synthesis is the third project I have undertaken with reference to the discourse of media archaeology, and the second to explore the relationship between electronic sound and light. The first project in the media archaeology series, Tonewheels (2007–14), drew on the century-old technology of optical film soundtracks. Inspired by pioneering 20th-century electronic music instruments such as Edwin Emil Welte’s Licht-Ton-Orgel (Light-Tone Organ, Germany, 1936), the ANS Synthesizer (Evgeny Murzin, USSR, 1937–57) and the Oramics system (Daphne Oram, UK, 1957–62), I developed electronic and graphical systems for modulating sound with light. In this live performance, transparent tonewheels printed with repeating patterns are spun over the same kind of light-sensitive electronic audio circuitry found in a 35 mm movie projector, producing pulsations and textures of sound and light (Fig. 7). Using only overhead projectors as light source, performance interface and audience display, Tonewheels aimed to open up the black box of electronic music and video by exposing the working processes of the performance for the audience to see.
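The pitch produced by a tonewheel follows from simple arithmetic: it is the number of pattern repetitions around the wheel multiplied by the wheel's revolutions per second. The Python sketch below assumes a pattern of equal clear and opaque stripes and an idealized photocell that outputs 1.0 when lit and 0.0 when dark; both functions and their parameters are hypothetical.

```python
def tonewheel_frequency(repetitions, rev_per_sec):
    """Fundamental pitch in Hz: pattern cycles passing the photocell per second."""
    return repetitions * rev_per_sec

def tonewheel_signal(repetitions, rev_per_sec, sample_rate=48000, n_samples=480):
    """Simulate the photocell output under a spinning, evenly striped wheel."""
    out = []
    for n in range(n_samples):
        turns = rev_per_sec * n / sample_rate    # revolutions completed so far
        phase = (turns * repetitions) % 1.0      # position within one stripe pair
        out.append(1.0 if phase < 0.5 else 0.0)  # clear half lets light through
    return out

# A wheel with 60 stripe pairs spinning 10 times per second sounds at 600 Hz
print(tonewheel_frequency(60, 10))  # 600
```

Changing the motor speed would transpose the pitch, while changing the printed pattern would alter the waveform reaching the photocell, and with it the timbre.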

The second media archaeology work, Delilah Too, proposed a model of voice encryption based on the voice scrambling capabilities of the vocoder, a device far better known for its role in the history of electronic music than for its cryptologic potential. While this work did not involve any light-based processes, it did give me the opportunity to dive into texts related to this discourse more deeply, in particular the writings of Friedrich Kittler.

In this media-archaeological context, it is important to point out that the history of electronic sound is more than one hundred years old, and that almost from the beginning it has been linked to light: either as an accompaniment to cinema, whose century closely followed it, or through the direct use of cinema’s optical sound reproduction technology as a means of creating abstract or performerless sound.

Throughout these more than one hundred years, the goals and utopias of electronic sound have changed little. We still aspire to imitate existing instruments, to synthesize previously unheard sounds or to realize complex works without the need for an orchestra. Likewise, the dystopias have changed little. Electronic sound remains a clumsy approximation of acoustic sound, it still employs alienating, cold, unemotional timbres and tones, and there still remains a fundamental disconnect between the source of the sound and what is heard. All this on top of the fact that, as Kittler writes, “the entertainment industry is, in every conceivable sense of the word, an abuse of army equipment” (Kittler 1999, 96–97).

Vector Synthesis also references the long history of pre-digital computing, where calculations were made by connecting the various analogue signal generators and processors of massive, patchable systems, and where results were displayed as graphs and functions on a CRT monitor. One of the first practical applications of the analogue computer was in controlling the trajectories of German V2 rockets as they traced their rainbow of gravity from Flanders towards London during the Second World War. And as Kittler observed, the relationship of media technology to military tools of destruction was sealed by moments such as these.

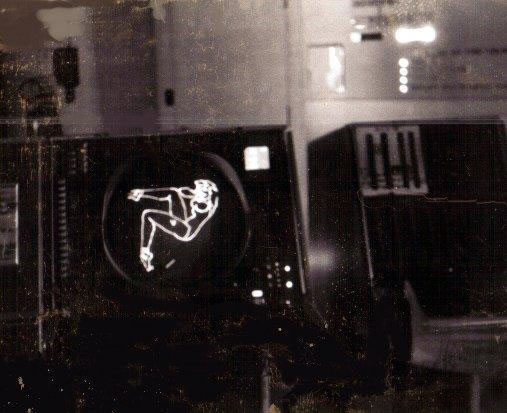

Post-war developments continued in this direction. Tennis for Two, a proto-Pong game programmed in 1958 by William Higinbotham on an analogue computer at Brookhaven National Laboratory on Long Island, New York (USA), used an oscilloscope as its display. The laboratory itself performed government research into nuclear physics, energy technology and national security. Indeed, as Benj Edwards writes, one of the earliest pieces of computer art, a vector representation of a Vargas pin-up girl, was created by anonymous IBM engineers in the late 1950s as a test screen for the SAGE (Semi-Automatic Ground Environment) defence computer of the US Air Force (Edwards 2013; Fig. 8).

In the early 1960s, Don Buchla redesigned the existing function generators of analogue computers to respond to voltage controls of their frequency and amplitude. This gave birth to the patchable, analogue modular synthesizer, which was subsequently expanded by others such as Bob Moog and Serge Tcherepnin. I think of this often when patch-programming my own modular systems, remembering that the signals I am creating are just as much mathematical expressions as they are movements of speakers or flashes of light.

Vector graphics were widely adopted by video game manufacturers in the late 1970s. Perhaps the most iconic of these games is Asteroids, a space shooter released by Atari in 1979. Arcade games such as Battlezone (1980), Tempest (1981) and Star Wars (1983) stand as other notable examples, and also as rudimentary training tools for the future e-warriors who would remotely guide missiles into Iraqi bunkers at the start of the next decade.

My own personal interest in analogue vector graphics isn’t merely retro-for-retro’s sake. Rather, it is an exploration of a once current and now discarded technology linked with these specific utopias and dystopias from another time. The fact that many aspects of our current utopian aspirations (and dystopian anxieties!) remain largely unchanged since the dawn of the electronic era indicates to me that seeking to satisfy them with ideas of newness and technological progress alone is quite problematic. Or as Rick Prelinger puts it, “the ideology of originality is arrogant and wasteful” (Prelinger 2013). Therefore, an investigation into tried-and-failed methods from the past casts our current attempts and struggles in a new kind of light.

Bibliography

Duff, Andrew. “Vectrex Minijack Input Mod.” 2014. http://users.sussex.ac.uk/~ad207/adweb/assets/vectrexminijackinputmod2014.pdf

Edwards, Benj. “The Never-Before-Told Story of the World’s First Computer Art (It’s a Sexy Dame).” The Atlantic. 24 January 2013. http://www.theatlantic.com/technology/archive/2013/01/the-never-before-told-story-of-the-worlds-first-computer-art-its-a-sexy-dame/267439

Hertz, Garnet and Jussi Parikka. “Zombie Media: Circuit bending media archaeology into an art method.” Leonardo 45/5 (October 2012), pp. 424–430.

Holzer, Derek. Vector Synthesis Library. 2017. http://github.com/macumbista/vectorsynthesis

Hordijk, Rob. “The Blippoo Box: A Chaotic electronic music instrument, bent by design.” Leonardo Music Journal 19 (December 2009) “Our Crowd — Four Composers Pick Composers,” pp. 35–43.

Kittler, Friedrich A. Gramophone, Film, Typewriter. Trans. Geoffrey Winthrop-Young and Michael Wutz. Stanford CA: Stanford University Press, 1999.

Klif, Ivan Marušić. REWEREHERE. 2017. http://i.m.klif.tv/rewerehere

LZX Industries. “Frequency Ranges.” http://www.lzxindustries.net/system/video-synchronization

Patterson, Zabet. “From the Gun Controller to the Mandala: The cybernetic cinema of John and James Whitney.” In Mainframe Experimentalism: Early computing and the foundations of the digital arts. Edited by Hannah B. Higgins and Douglas Kahn. Berkeley and Los Angeles CA: University of California Press, 2012.

Prelinger, Rick. “On the Virtues of Preexisting Material.” Contents 5 (January–April 2013). http://contentsmagazine.com/articles/on-the-virtues-of-preexisting-material

Torre, Giuseppe, Kristina Andersen and Frank Baldé. “The Hands: The Making of a Digital Musical Instrument.” Computer Music Journal 40/2 (Summer 2016) “The Metamorphoses of a Pioneering Controller,” pp. 22–34.

Vasulka, Steina and Woody Vasulka. “Bill Etra & Steve Rutt.” In Eigenwelt der Apparatewelt: Pioneers of electronic art. Edited by David Dunn. Ars Electronica, 1992.

Whitney, John, Sr. “Experiments in Motion Graphics.” 16 mm film, colour, sound. 11:54. IBM, 1968. Available at http://youtu.be/x-iZBVhKqzM
