“LEXICON” — Behind the Curtain

This article is based on a Keynote Address given by the author at the Tape to Typedef conference at the University of Sheffield (UK) on 1 February 2013.

Background and Context

LEXICON (2012, for 8-channel fixed sound and video) is a work dealing with dyslexia, a common and often debilitating condition sometimes referred to as “word blindness”. It arose from the opportunity to work with a group of dyslexia researchers at Bangor University (Wales), and is inspired both by the ideas emanating from current dyslexia research and by the experiences of people with dyslexia. It takes as its starting point a poem by a twelve-year-old boy with dyslexia (“Tom”) in which he tries to articulate his relationship with written text. For LEXICON I asked several people with dyslexia (including the now grown-up author) to read the poem in its original “dyslexic” form, and used the sounds of these readings as the source material for the work. The idea was to capture in these readings a sonic trace of some of the mental and practical struggles that people with dyslexia face in day-to-day life.

One of the ideas which the piece explores is the idea of the “mistake” as less of a transgression, and more a creative opportunity. For example, in his poem Tom intends to write “Words are like leaves blowing in the wind” (which is an evocative enough image in itself) but instead he writes “Words are like lifes…” which suggests an additional, unintended layer of metaphor: that, for those with dyslexia, life itself (or indeed many lives) is somehow chaotic, uncontrolled, confused. When asked to read the poem, one of the readers read this line as “Words are like flies…” which presents another unintended yet powerful and somewhat darker image. These two “mistakes” led to the unexpected idea of a whole section of the piece based on the swarming of flies.

Acousmatic Music — With video?

LEXICON might be considered by some to be an audiovisual composition or a multimedia work. I prefer to describe it as acousmatic music with video, but this raises an obvious question. The term “acousmatic” is usually reserved for music without any visual element, taking its name from the legend of Pythagoras’ acousmatikoi, who listened to his lectures from behind a curtain without ever seeing him. 1[1. Diogenes Laërtius, Lives and Opinions of Eminent Philosophers, Book VIII.] Acousmatic music is surely therefore “invisible” music, so is not the presence of a visual element at odds with the term “acousmatic”?

Of course, there is no denying that listening to LEXICON while watching the video offers a very different experience to listening without it (in true acousmatic mode). Yet, as an acousmatic composer, my concern in LEXICON remains primarily with the invisible world of sound, hidden from view, as it were, by the veil of loudspeakers. The video then addresses the question: if we could see beyond the veil, what might we see?

For me the experience of composing this, my first video piece, has helped to clarify some of my thinking about what acousmatic music (without video) really is. Rather than undermining the acousmatic aspect of the work, I found that working with video seemed to offer analogies with the acousmatic world which throw some light on it, the world of images somehow offering a metaphorical glimpse of the invisible world that lies beyond Pythagoras’ curtain. In particular, working with video has helped me to clarify what I think of as the four “articles of faith” of acousmatic music.

1. There is Nothing to See — And We Know It

Firstly, acousmatic music is unseen music. This is not just a description of a situation, but an announcement of an intent. That there is “nothing to see” is not in itself a defining characteristic of acousmatic music; it is, rather, that we intend there to be “nothing to see”. Within the term “acousmatic music” the word “acousmatic” is used not simply in its literal sense of that which is heard without being seen. In that case a Beethoven string quartet heard on CD would be acousmatic music (since we cannot see the players), as would be Beethoven listened to in a concert hall with our eyes closed, or Beethoven played by street musicians just around the corner from us. But this is not “acousmatic music” and Beethoven is not an acousmatic composer, because while his music may be invisible he does not intend it to be, and therefore does not take its invisibility into account when composing it; invisibility is not an essential aspect of its conception and, while we may choose to listen to it “acousmatically”, to do so is not essential to its reception. Acousmatic music, then, is not just invisible music, but music whose invisibility is an essential aspect of its æsthetic function and a conscious aspect of the creative process behind it — there is nothing to see, and we know it.

The primary use to which acousmatic composers put this awareness of invisibility is to take advantage of Schaeffer’s famous reduced listening — temporarily discarding what we think we know about the real-world origins of sounds (their sources, contexts or meanings) in order to gain a clearer and more detailed apprehension of the qualities of the sounds themselves. The term “reduced listening” places the emphasis on reduction: reducing the scope of our consideration of the sound by jettisoning considerations beyond the heard acoustic phenomenon itself; but in fact, it may be more accurate to speak of enhanced listening 2[2. Not to be confused with the “expanded listening” coined by Jonty Harrison, which term refers to the seamless incorporation of real world associations into the consideration of sound materials (Palmer 2002).], since we are not only losing something from our apprehension of the sound, but also gaining something. What are we gaining?

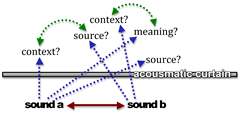

First, clearing our thoughts of the distracting and sometimes misleading associations of source leaves us free to focus on a new appreciation of the details of the sound in itself: its shape, its colour, its texture, its internal detail, the way that it changes over time. Second, severing the connection with the original source turns the sound into a “free agent” which may more readily connect with other sounds. These sound-to-sound connections are made not on the basis of similarities of source, context or meaning, but rather on the basis of purely acoustic characteristics: sonic connections replace semantic ones. Third, having disconnected the sound from its original source, context or meaning, we are free to re-connect it with other sources, contexts and meanings; and these may be real, implied or imaginary. Fourth, we may make connections directly between these sources, contexts and meanings. In a way, having deliberately interposed the impenetrable barrier of the Pythagorean curtain between real sources and heard sounds, we now deliberately seek to penetrate it in order to make new sound-to-source connections which would have been improbable otherwise (Fig. 1).

Nothing to See?

When it comes to the question of working with video, the maxim “nothing to see” does seem somewhat problematic to say the least. However, from my experience of working on LEXICON there is at least some common ground between working with sound acousmatically and working with video. Chief among the shared elements is the visual analogue of reduced listening: just as one can separate a sound from its source, so one can separate visual images from their original source (or context, or meaning) to bring about a sort of reduced seeing (or perhaps better, enhanced seeing). In LEXICON this process is greatly helped by using only written text, words and letters as visual source material.

Words as we encounter them in everyday use have an obvious meaning and context, but if we see a single word on the screen, we no longer have a context; this also often means that we are not completely sure of the meaning. If instead of a complete word we see a few letters, we have even less of an idea of the original meaning, and after a while of seeing such meaning-free letters, we start to become aware of them in a new way: as shapes and forms, lines and curves. We become able to perceive them as visually abstract material, an ability which the distractions of the much more powerful layer of “meaning” normally deny us (Example 1, 0:00–01:40). 3[3. All examples cited can be heard and seen on the author’s Vimeo profile, on the page “Andrew Lewis — LEXICON (complete),” posted Spring 2013.]

As with reduced listening, the idea here is not simply to turn letters into isolated, abstract forms. It is rather, having disconnected them from their original contexts, to allow them to form new connections; connections with other similarly abstracted forms, but also connections with other meanings, and even with sounds.

An illustration of these breaking and re-forming links is found in a passage in the middle of LEXICON (Example 2, 7:43–9:02). It involves the written words “lifes” and “flies”. When we are presented with a juxtaposition of the two words, it is immediately obvious that though they are unconnected in meaning, “lifes” and “flies” are closely connected as shapes. Soon after this, we see the shape of the word “flies” dissolve into an abstract particle cloud, at which point the typographical sign has been destroyed and the link with meaning momentarily severed (thus we are invited to use reduced seeing). But then the link with meaning is re-established through motion and behaviour, as the particles start swarming like flies. Finally, the individual particles are seen to be the word “words”, yet their behaviour continues to suggest that of flies.

This whole sequence has strong resonances with dyslexia. Engaging with written text involves making connections: connecting abstract signs with their meaning, connecting signs with sounds, and connecting sounds with meaning. Dyslexia is normally described as a deficit in the ability to make these connections, but in LEXICON I wanted to suggest a different view: just as the idea of reduced listening (the severing of connections between sound and meaning) opens up new possibilities for the apprehension and appreciation of sound, perhaps we could take a similar view of dyslexia; rather than describing dyslexia’s disconnect between sign, sound and meaning as a “deficit”, we could instead see it as a freeing of the shackles of conventional signification, which can then offer new creative possibilities.

This idea is reflected in a passage near the end of the piece (Example 3, 13:28–14:23). We hear words spoken clearly and intelligibly, along with those same words in heavily filtered form, such that their original meaning cannot be determined from sound alone: they have been “reduced” to abstract pitch shapes. Yet we can still connect the abstract sounds to meaning because we recognise in them the same pitch shapes as are found in the intelligible words. At the same time, we see the text of these same words, first clearly and then similarly reduced to abstract shapes of coloured light; and again, we can connect abstract and meaningful versions by their similarity of shape. This is all a play on the idea of the “correct” but perhaps prosaic meaning of the words versus their abstracted and yet more powerfully imaginative and evocative “meaningless” forms.

As a final manifestation of the “nothing to see” principle, LEXICON also contains acousmatic episodes; that is, periods when the video is blank and only sound is heard. This compels the audience to adopt an acousmatic listening mode, partly as a reminder of the primacy of sound in the work. Ironically perhaps, the longest acousmatic episode is made up of recognisable, anecdotal sounds — the sound of a forest on a windy day, with leaves blowing around (Example 4, 10:10–11:45). So while there is indeed nothing to see, we are not required to use reduced listening; instead, very powerful mental images of a real-world situation are evoked, and these contrast with the visible images in the rest of the piece which depict only text or abstract shapes.

Interestingly, this is one of the moments of the piece that elicits the strongest reactions from audiences; while some like it, others find it disconcerting, with one person remarking, “I felt like someone had just turned off the TV.” This is especially fascinating given that LEXICON has most often been performed as part of concert programmes in which all the other pieces are acousmatic (that is, sound only), and perhaps this says something about the sheer power and dominance of the visual in contemporary society, at the expense of sound: being made to just listen is uncomfortable, even for those who have paid good money to do precisely that!

Society’s obsession with the visual extends even to the field of interventions for dyslexia. Conventional understandings of dyslexia and strategies for dealing with it have focused very much on the visual. Many people with dyslexia have been encouraged to use coloured filters when reading text, and many have reported dramatic effects in their ability to pick out words accurately; but more recent research has shown that such techniques produce no measurable effect at all. Instead, dyslexia research is now pointing to the important role of phonological processing — the process of interpreting the sounds of spoken words and linking these to the letters that represent them. In short, the message from much current dyslexia research is that sound is more important than we think. Yet this seems a hard message for a visually obsessed culture to hear; despite hard data on the importance of sound and the ineffectiveness of coloured filters, the filter technique continues to be used, and those for whom it is recommended continue to insist that it is helping them.

2. Fixed Medium

Secondly, and for me at least, acousmatic music means fixed-medium music. The technologies used by composers today present us with many more possibilities than when electroacoustic tools first began to be used, and this is especially true in the area of real-time processing, from live instrumentalists interacting with computers to laptop improvisation. It can be tempting to imagine that these newer ways of working somehow supersede the rather archaic approach of fixed-medium composition; but the composers who pioneered fixed-medium composition from the late 1940s onwards did not do so simply because real-time processing was not yet available; they did so because fixed medium was available. Recording and editing sounds on tape was never regarded as an inferior alternative to transforming them “in real time”, but was rightly seen as a new and exciting technological approach in its own right, which made possible a way of working that was previously impossible, and that was alive with unexplored creative potential. And the creative possibilities of recording and editing fixed sound remain as potent today as they were then.

Working in a fixed medium profoundly affects the way we engage with sound, both as composers and as listeners. It allows composers to create music in which the tiniest detail of sound is important; transforming ephemeral, transient detail into permanent, significant detail. Its ability to do this has nothing to do with real-time processing, but arises precisely from the possibility of engaging with sound out of real time. It allows us to ponder our sounds, to reflect on them, to put them under a microscope, to thoughtfully consider their possibilities and to search diligently within them for the inner life they may contain. It allows us (as I often encourage my students) to “first find, then seek”: in other words, having discovered and recorded our sound materials (the “finding” part), then to spend plenty of time experimenting with those sounds, “seeking” the creative potential within them in order to liberate their innate and latent possibilities. This process is necessarily reflective, detailed, iterative and, most importantly, unconstrained by real time.

As for listeners, fixed medium enables us to engage with sound in a much more detailed way. It can do this because it is repeatable: we can hear the same piece over and over, and when we do so the piece (if not the experience) will be exactly the same in every detail (variations in listening conditions notwithstanding). This means we can become intimately acquainted not just with the musical idea, but with the sound itself, and this in turn means that the sound is no longer some sort of carrier of the musical idea: the sound is the musical idea. 4[4. Incidentally, this can also happen with our earlier example of Beethoven heard on CD: we can become so intimately acquainted with the tiniest details of a particular recording that we begin to feel they are essential to the music, despite them being details of which the composer himself was completely unaware.]

Fixed-Medium Video

As an acousmatic composer approaching video for the first time, I experienced a comforting sense of familiarity. First, there is an obvious parallel in the methods of working: digital, computer-based non-linear editing, with all its associated techniques (cut, move, montage, layer, mix, transform and, most importantly, undo). Just as with fixed-medium sound one can engage with materials out of real time (consider, ponder, reflect, tweak, adjust, backtrack), so exactly the same possibilities exist in working with video. This is not just a similarity in working methodology, but a similarity in how that methodology affects one’s relationship with the material. Just as with fixed-medium sound one is invited to explore, probe and experiment, so with images one is drawn into discovering the latent possibilities that lie inside the image: first to find, then to seek.

The other aspect of fixed-medium sound that applies to video is repeatability, and this similarly affects the level of detail at which we can work. That which is transitory in real time can actually become very significant, and these details will reveal themselves on repeated viewing just as they will with repeated listening. A passage appears early in the piece (Example 5, 1:54–3:20) that is made up of a series of still images edited together at almost subliminal speed. On first viewing, certain images stand out (usually different ones for different people) but on repeated viewings individual images become more obvious and take on greater significance — the word “PUSH” on a door, for example, perhaps suggesting the effort that a person with dyslexia has to make to gain access to many aspects of daily life.

3. Time-Structured

Thirdly, I consider that acousmatic music is time-structured: that is to say, one thing leads to another, things happen at “the right time” and, as one of my students once put it, “There is a beginning and an end — and arguably, a middle.”

To put it another way, it is music, not just art. For me, time-structuring is at the centre of the distinction between acousmatic music and the kind of sound art one might find in a gallery, and which often has its roots in the visual or plastic arts. Such sound art may be acousmatic in a literal sense (assuming there is no visual element) but it is not music — at least, not as we understand it in the tradition of Western art music to which I consider my work to belong. In that tradition, musical discourse forms an argument which unfolds over time: one thing leads to another, and at the right time. Certainly, Western art music has sometimes experimented with non-linear temporality, but the non-linearity is usually limited in such a way as to ensure that a measure of “argument” or time-based structure remains; and despite such experiments, and even within many of them, time-structuring remains a key feature of this musical tradition — within which I would squarely place acousmatic music. Incidentally, terms such as “acousmatic art”, or the more often used but much less useful “sonic art”, are linguistically very close to “sound art”, yet they seem most often to be applied to works in which time-structuring plays a central role (musical works?) and which are thus very unlike the “sound art” of most gallery installations.

Of course, in a limited sense all sound art is time-based, since sound can’t exist without time, but time-based material doesn’t necessarily mean that the artistic conception or æsthetic function is itself time-based. In the case of many gallery sound installations, it is not usually significant when the listener arrives or leaves, or for how long they listen. Even if it is significant, the temporal experience is not something that is shaped by the artist, but rather by the listener.

Naturally, the sound artist will have considered temporality in the making of the work: deciding whether things will happen periodically or not, how regularly and how often they will repeat, and so on. However, this will not be done in a way that leads the listener through a step-by-step, time-structured experience: establishing ideas, creating expectation, fulfilling or foiling that expectation, springing surprises, creating climaxes, revisiting material, bringing threads together, creating closure. All this is the preserve of that kind of sound art in which events are specifically ordered and shaped in time: or to use a more convenient label, music.

Time and Contextual Transformation

The time-structuring of (acousmatic) music is not just something that has structural significance on a global scale, but is powerfully active at the level of material and ideas. All sound material is context dependent simply because our perception of it is context dependent — especially temporal context — and this is particularly the case in acousmatic music, which emphasises the listener’s perception over the composer’s conception. In such music there is great significance in what has come before the sound we are hearing (and when) and what will follow (and when). A single, unchanging sine tone might not be the most interesting sonic object in itself, but in the right context (for example, as a moment of repose following an otherwise frantic texture) it can become magically transmuted simply by its position in time with respect to other events. The question of whether A follows B, or vice versa, can have a transformational effect far more powerful than any “plug-in” yet devised. When it comes to video material, precisely the same principle applies. A completely black screen may not seem a very exciting image, but a black screen at precisely the right moment and of precisely the right duration can be transformational; but only at the right moment, and for the right duration. Getting that right requires fine judgement and no little experience.

This raises an obvious problem for me, however: I am a composer, not a video artist. I may be relatively confident of my judgement of timing and pacing when it comes to sound (more or less): I control the flow of time, determine pacing, shape articulation and phrasing in time, and so on. Unfortunately, images are not sounds, and I simply had no experience in doing this sort of thing in the visual domain before starting work on LEXICON. My solution was to compose the music for the piece first and then add the video. Not only was this a rather satisfying reversal of the usual process in movie-making, but it also had the practical benefit of allowing me to organise the temporal aspects of the piece using the medium that I know best. The pre-composed sound thus acted as a temporal template for the video; one that I felt I could trust to a fair degree. The image was then added in a very closely synchronised way, always staying close to the temporality of sound, so that for the most part the image and sound appear simply as different manifestations of the same events.

To a great extent, this produced acceptable results, at least to me. There is an obvious problem with this approach however, which is that perceptual time passes differently when we are just listening compared to when we are listening and watching. This means that although my sonic temporal template was good for the most part, there were sections which became temporally distorted (usually shortened) when combined with images. An example of this occurs at the end of the piece (Example 6, 14:37–15:07) when a lengthy, static drone occurs. Heard without images, this appears to be too long (or at least, on the limits of what might be considered wise), but when combined with images (which in this case are quite active) the flow of time accelerates appreciably and the timing becomes about right. 5[5. In fact, if anything it is perhaps a little too shortened, as I was aiming for a compromise between the usual version with video and the possibility of presenting the piece as sound only, on CD for example.]

4. Gesture-Based

My fourth defining characteristic of acousmatic music is that it is “gesture-based”, not just “sound-based”. That is to say, the concept of “gesture” is a key component of the way acousmatic music deals with sound-based materials, and this is a defining characteristic of its technical and even æsthetic approach.

“Gesture” is a deceptively simple word; in discussions of electroacoustic music it carries so many different and often contradictory meanings that one almost needs a supporting essay every time it is used, and there is not space here for that. For me, gesture in acousmatic music relates to the tendency of listeners to attribute to sounds some measure of energetic causation; if this in turn signifies some measure of human physical agency, we speak of gesture. This is gesture as defined by Denis Smalley (1997), with the exception that in my own understanding, gesture is not necessarily restricted to sounds which carry energetic forward momentum at the level of musical discourse: gesture may also include more temporally extended sounds — sustained, continuous and textural — because physical, energetic causes (and implied human physical agency) may also be sustained and continuous, or give rise to textures. Such sounds can be just as gestural as dynamic, forward moving ones.

The idea of the perceived energetic cause is something that is essential to sound, but not necessarily to sight. Sight is based on light energy, usually reflected light coming from an energy source outside the object itself (notwithstanding the obvious exceptions: fire, lightbulb, computer display and so on). This means that even if an object is completely inert, we can still see it (think of a parked car, for example). Yet we cannot hear inert objects, because hearing requires sound waves in the air directly caused by the vibrational energy of the physical mass of the object itself; and where the object is not energetically active, there is no sound (parked cars can be seen, but not heard). So while seeing an object does not necessarily tell us anything about the energy it possesses (unless we also see it move or act in some way), when we hear something we are always hearing energy, and this energy implies causation, and thus — potentially at least — gesture.

This difference between the essential gestural potential of sound and the optional gesturality of sight is especially clearly illustrated by words and text. The sound of a spoken word always signifies the energy of diaphragm, lungs, lips, tongue and vocal tract, the energy of vibrations and of channelled and expelled air; it is “gestural”. A written word, of course, does not; it simply sits passively on the page. However, in LEXICON the idea of gesture is extended from the sonic material into the visual by giving the written text gesture-implying behaviours, energies and actions of its own: the text becomes physically present and physically active. Two simple examples illustrate this well. In the first (Example 7, 15:04–15:13), the word “page” is made to spin in a way that mimics the spinning behaviour of the sound of the spoken word “page”. 6[6. To be more accurate, the sound on its own does not particularly suggest spinning, but the image of the spinning word creates the illusion of a spinning sound.] In a second example (Example 8, 5:42–5:50), the granulation of spoken words emphasises the energetic expulsion of air in the plosives (particularly the “p” of “problem”), and the visual letters support and reinforce this idea, appearing to be rapidly spat out under pressure.

Conclusion: In Defence of Dogma

While I hope I have illustrated some of the common ground between acousmatic and visual approaches in LEXICON, it will be obvious that acousmatic perspectives are the dominant and foundational ones in my thinking; and it will also be obvious that my thoughts on acousmatic music — what it is, what it is not, why it matters and what I am trying to do when I make it — are very clear, even dogmatic. Certainly such forthright clarity might seem surprising in the context of what is, after all, an interdisciplinary work (that is, one combining acousmatic music and video art, as well as combining art and science); it might seem that such a robust and perhaps simplistic view of what I think acousmatic music is would in some way be incompatible with undertaking an interdisciplinary endeavour. I would say it is not at all incompatible, but essential. Archimedes said: “Give me a place to stand, and I will move the world.” 7[7. Pappus of Alexandria, Synagoge, Book VIII.] Reaching out across interdisciplinary boundaries requires us to be clear about where we are reaching from, and where we stand as we do so. We need a solid foundation in our own discipline (for me, acousmatic music) and a clear understanding of what it is that we are hoping to contribute to the interdisciplinary mix, if we are to avoid creating a bland and generalised mixed-media concoction which attempts many things but excels at none. Rather than undermining my thoughts about the nature and value of acousmatic music, I have found that the fascinating experience of working with video in LEXICON has in fact served to enhance and confirm my view of the importance of working with gesture, with time-structuring, in a fixed-medium… and knowing that there is “nothing to see”.

Bibliography

Palmer, John. “High Spirits with Jonty Harrison.” 21st Century Music 9/1 (January 2002), pp. 1–4. Republished as “In Conversation with Jonty Harrison” in eContact! 10.2 — Entrevues / Interviews (August 2008) https://econtact.ca/10_2/HarrisonJo_Palmer.html

Smalley, Denis. “Spectromorphology: Explaining Sound-Shapes.” Organised Sound 2/2 (August 1997) “Frequency Domain,” pp. 107–126.
