Amuse-Me

A portable device to transform electromyographic signals into music

Background

Developers of human-computer interface technologies have recently been focusing on the use of bio-electrical signals (eye movement, electrical activity of muscles and brain) as inputs to control devices in order to increase the capacity of signal processing of the central nervous system (enhanced perception and control). The design of human-computer interfaces is intended to adapt the existing technology to human physiology with devices that are sensitive, interactive and easy to use.

Improving the quality of life through the use of medicine, education, recreation and communication is a social priority; in particular the use of games or music in rehabilitation may provide a better approach when working with children or patients with reduced cognitive abilities.

Surface electromyography (sEMG) consists of measuring the electrical activity of muscles during their contraction (Merletti et al., 2010). The control of prostheses with myoelectric signals is a current practice (Parker and Scott 1986). Some of the prostheses controlled by myoelectric signals that are available today on the market were developed in collaboration with different universities (MIT, Boston, Utah, New Brunswick, Michigan). However, the EMG signals have in most cases been used as a switching control. For example, in 1994 Dave Warner and colleagues described the development of “music devices controlled by the muscles” using the timing of muscle activation as a control to turn a device on or off.

In a study published thirty years ago (Asato et al., 1981), auditory feedback (sounds modulated in frequency by EMG) was successfully used in the neuromuscular re-education of mentally retarded patients. This use of muscle sounds in rehabilitation is interesting since it allows the patient to have an immediate idea about the intensity of the muscle contraction even on muscles where it is not possible to have a force feedback, and allows a more natural approach compared to displaying parameters on a PC monitor or on an oscilloscope.

More recently, a music interface based on biometric signals (accelerometers and joint goniometers) was used to synthesize simple modulated sounds from the position of the upper limbs (Knapp and Lusted 1990; Tanaka 2000). The interface was later improved by introducing the combined use of EMG and accelerometer signals (Tanaka and Knapp 2002; Dubost and Tanaka 2002).

In the mid-1990s, the Human Performance Institute of the California-based Loma Linda University Medical Center developed a telerobotic device, a radio-controlled car called the “BioCar” with direct bio-cybernetic control (Warner et al., 1994). Using software that identified muscle activation and mapped it to simple on/off controls from three muscles, a man paralyzed in an auto accident was able to navigate the BioCar through a very complicated course using the muscles of his face and arms. The same system that allowed him to control this toy car could be easily adapted to control his wheelchair or some type of robotic arm. Thus, the BioCar is a demonstration of how biosignals can be used to control real objects through computers.

The EMG signals from the flexor digitorum superficialis (FDS) and flexor carpi ulnaris (FCU) muscles have been used recently as a form of real-time control for manipulating simple geometric objects in a virtual model of the joints of the fingers and wrist (Reddy and Gupta 2007). However, despite these many experiments and developments, the use of EMG signals for controlling objects in virtual reality or in a real environment still remains a challenge.

Generation of Music with Biomedical Signals

One of the first attempts to create futuristic music using the analysis of body movement was made by Leon Theremin, who in 1928 developed devices that automatically reacted to the movements of a dancer and, as a result, produced various motifs of lights and sounds. Much later, in 1965, John Cage, David Tudor and Merce Cunningham collaborated on Variations V, a project where dancers activated sounds by interrupting with their bodies the path of light beams shone across the stage.

In 2001, Roberto Morales-Manzanares and colleagues published a scientific paper on the application of movement analysis for the generation of music. The movements of the subject’s body were detected with a “sonar” focused on the body of a dancer. Two years later, Eduardo Reck Miranda and colleagues published a study in which electroencephalographic signals were used for the first time for the generation of sounds.

Although EMG research has advanced rapidly and several companies now produce and sell systems capable of detecting signals from two-dimensional arrays of surface electrodes, no device capable of generating music from EMG signals was found in the literature.

Objective

The research conducted at the LISiN (Laboratorio di Ingegneria del Sistema Neuromuscolare e della Riabilitazione Motoria [Laboratory for Engineering of the Neuromuscular System and Motor Rehabilitation]) in Torino, Italy, focuses on the design and development of EMG electrodes and multichannel amplifiers. One of the projects carried out in recent years was the development of interfaces to generate sounds in real time from biomedical signals. In particular, the aim of the present work is to design and develop a device that can transform the surface EMG (sEMG) signal into a sound that is audible and recognizable by the human ear. Listening to the sound of a muscle could help doctors to make a diagnosis on the basis of sound patterns that are associated with specific muscle diseases, as is now routine with intramuscular EMG.

The innovation of the system with respect to previous attempts reported in the literature is the use of the EMG signal not as a simple trigger, but as a control, modulated in amplitude, which provides a more natural interaction with objects or musical instruments in comparison to a simple on-off control.

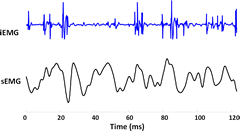

The signal traditionally used in clinical analysis is the intramuscular EMG signal (iEMG) collected with needles. The sound obtained by connecting the intramuscular amplifier to a loudspeaker has characteristics that make it possible to distinguish different pathologies by listening to the sound produced during muscle contraction (Sanger 2008).

When the muscle is contracted, the iEMG signal sounds like hail on a tin roof. This is because the bandwidth of the iEMG signal is between 100 Hz and 4 kHz and each motor unit action potential (MUAP) is usually a polyphasic waveform lasting about 3–5 ms. The sound of a single motor unit discharge is therefore similar to a “click” that is clearly distinguishable from background noise.

Figure 1 shows an example of iEMG and sEMG signals detected simultaneously from the sternocleidomastoideus muscle. We can distinguish the MUAPs and observe the different spectral components. The sound that one can hear when listening to the surface EMG signal directly without processing is low and uniform, comparable to the “sound of the sea” that can be heard by placing a large seashell close to your ear [1. The sound heard “inside” the seashell is in fact that of the body’s blood flow amplified by the seashell’s interior cavity.], or to “the sound of the wind”. In the sEMG signal, the individual motor units cannot be distinguished, while the sound of the iEMG is like a series of clicks, where each click corresponds to a MUAP.

Methods

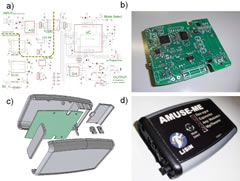

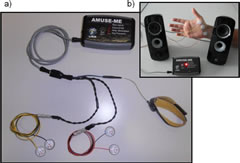

The acronym AMUSE-ME comes from the full name of the instrument: Apparecchiature per la simulazione di strumenti MUsicali pilotati da SEgnali MioElettrici [Devices for the simulation of Musical instruments controlled by Myo-Electric Signals] [2. This project was supported by Fondazione Giovanni Goria within the framework of a “Master dei Talenti della Società Civile.”]. The approach used to develop the device was to keep the hardware as simple as possible and to use digital signal processing implemented in a microcontroller to transform the sEMG signals into sounds. AMUSE-ME was designed to be small enough to be wearable and can be operated on battery power. The prototype has two input channels, so that two muscles can be connected simultaneously. The channels are also kept separate at the output (they are not mixed), which can be connected to an audio amplifier or to headphones, with muscle 1 assigned to the left channel and muscle 2 to the right.

Hardware

We decided to separate the conditioning of the EMG signal from the digital signal processing in two blocks (front end and motherboard) in order to simplify the system design:

- The front end is the part of circuit close to the electrodes, which contains the analogue electronics, the EMG signal conditioning, filtering and amplification;

- The motherboard is the part of the circuit which contains mainly the A/D and D/A converters, the microcontroller and the control buttons.

The first stage of the front end is an instrumentation amplifier. The second stage is a second-order high-pass filter with a cut-off frequency of 10 Hz. The third stage is a second-order low-pass filter with a cut-off frequency of 400 Hz. The overall gain of the chain is 1000, i.e. the signal is amplified from hundreds of microvolts to hundreds of millivolts.
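The figures above can be checked with a short numerical sketch. It assumes Butterworth responses for both second-order filters, which the text does not specify, and all function names are illustrative rather than taken from the AMUSE-ME design.

```python
import math

GAIN = 1000.0   # overall gain of the chain (from the text)
F_HP = 10.0     # high-pass cut-off in Hz (from the text)
F_LP = 400.0    # low-pass cut-off in Hz (from the text)


def highpass_mag(f, fc=F_HP):
    """Magnitude of a 2nd-order Butterworth high-pass (ASSUMED response)."""
    r = (f / fc) ** 2
    return r / math.sqrt(1.0 + r * r)


def lowpass_mag(f, fc=F_LP):
    """Magnitude of a 2nd-order Butterworth low-pass (ASSUMED response)."""
    r = (f / fc) ** 2
    return 1.0 / math.sqrt(1.0 + r * r)


def chain_gain(f):
    """Overall voltage gain of the front end at frequency f (Hz)."""
    return GAIN * highpass_mag(f) * lowpass_mag(f)


def output_volts(input_volts, f):
    """Output amplitude for a sinusoidal input of the given amplitude."""
    return input_volts * chain_gain(f)


if __name__ == "__main__":
    for f in (1, 10, 100, 400, 1000):
        g = chain_gain(f)
        print(f"{f:>5} Hz: gain {g:7.1f} ({20 * math.log10(g):5.1f} dB)")
    # A 300 microvolt component at 100 Hz leaves the chain at about 0.3 V.
    print(f"{output_volts(300e-6, 100):.3f} V")
```

Under these assumptions the gain is close to 1000 (60 dB) in the middle of the pass band and drops to about 707 (3 dB lower) at each cut-off frequency.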

Figure 2 shows the schematic and the printed circuit board of the motherboard of the AMUSE-ME device. The main components of the motherboard are the A/D converter, the optical isolator, the microcontroller and the D/A converter. The low-impedance signals arriving from the front end are sampled at 10 kHz and converted to digital form with 16-bit resolution. The digital signals are optically isolated in order to protect the subject from accidental shocks. They are then transformed into sounds by algorithms implemented in the microcontroller, and finally converted back into analogue signals by the D/A converter and sent to an external audio amplifier.
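To give a sense of what 16-bit conversion at 10 kHz implies, the sketch below computes the quantization step and the raw data rate. The bipolar ±2.5 V input range is an assumption made only for illustration, since the text does not state the converter's range, and the function names are hypothetical.

```python
FS_HZ = 10_000   # sampling frequency (from the text)
BITS = 16        # A/D resolution (from the text)
V_REF = 2.5      # ASSUMPTION: bipolar input range of -2.5 V to +2.5 V


def lsb_volts(v_ref=V_REF, bits=BITS):
    """Size of one quantization step at the converter input."""
    return 2 * v_ref / (2 ** bits)


def quantize(volts, v_ref=V_REF, bits=BITS):
    """Convert a voltage to a signed integer code, clipping at the rails."""
    code = round(volts / lsb_volts(v_ref, bits))
    lowest, highest = -(2 ** (bits - 1)), 2 ** (bits - 1) - 1
    return max(lowest, min(highest, code))


def data_rate_bits_per_s(channels, fs_hz=FS_HZ, bits=BITS):
    """Raw bit rate produced by the A/D converter."""
    return channels * fs_hz * bits


if __name__ == "__main__":
    print(f"LSB at converter input: {lsb_volts() * 1e6:.1f} uV")
    # Referred to the electrodes, the step is 1000 times smaller (gain 1000).
    print(f"LSB at electrodes:      {lsb_volts() / 1000 * 1e9:.1f} nV")
    print(f"Code for 0.3 V:         {quantize(0.3)}")
    print(f"Two-channel data rate:  {data_rate_bits_per_s(2)} bit/s")
```

With a 10 kHz sampling rate the Nyquist frequency is 5 kHz, well above the 400 Hz low-pass cut-off of the front end.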

Firmware

The algorithms developed to transform the EMG signal into audible signals were tested off-line in Matlab on previously recorded EMG signals and then tested on-line with a custom-designed LabView interface. The algorithms were then implemented in firmware and loaded onto a microcontroller.

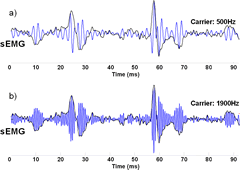

Figure 3 shows an example of amplitude modulation of a carrier by the sEMG signal, at two different carrier frequencies. The carrier frequency implemented in the firmware was set to 1 kHz.
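A minimal sketch of this kind of amplitude modulation is given below. It assumes that the modulation is a plain sample-by-sample product of the signal and the sine carrier, and it uses random noise as a stand-in for a recorded sEMG burst; neither detail comes from the firmware itself.

```python
import math
import random

FS_HZ = 10_000       # sampling frequency (from the text)
CARRIER_HZ = 1_000   # carrier frequency set in the firmware (from the text)


def amplitude_modulate(signal, carrier_hz=CARRIER_HZ, fs_hz=FS_HZ):
    """Multiply each sample by a sine carrier (ASSUMED product modulation)."""
    return [
        x * math.sin(2 * math.pi * carrier_hz * n / fs_hz)
        for n, x in enumerate(signal)
    ]


if __name__ == "__main__":
    random.seed(0)
    # Stand-in for sEMG: 100 ms of rest followed by 100 ms of "contraction".
    rest = [random.gauss(0.0, 0.01) for _ in range(1000)]
    burst = [random.gauss(0.0, 0.3) for _ in range(1000)]
    audio = amplitude_modulate(rest + burst)
    print(f"peak during rest:  {max(abs(s) for s in audio[:1000]):.3f}")
    print(f"peak during burst: {max(abs(s) for s in audio[1000:]):.3f}")
```

At 10 kHz sampling, a 1 kHz carrier is represented by ten samples per period, and the modulation shifts the energy of the signal to the region around the carrier frequency.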

The firmware developed to drive the system AMUSE-ME includes:

- a portion of the code for data reading and writing (from the A/D converter and the D/A converters, respectively);

- a specific portion of the code for each mode of operation (non-linear amplification, frequency modulation, amplitude modulation, generation of a sine wave, etc.).

Eight modes of operation are implemented and can be selected by pressing a button on the front panel of the device. The output signals can be connected to an oscilloscope, to an audio amplifier or to a pair of headphones.

The eight modes are:

- Mode 1: The raw EMG signal from one muscle.

- Mode 2: The raw signal raised to the third power.

- Mode 3: The raw signal raised to the fifth power.

- Mode 4: Amplitude modulation of a 1 kHz sine wave with the raw signal from one muscle.

- Mode 5: Amplitude and frequency modulation of a sine wave with the raw signal from one muscle.

- Mode 6: Envelope of the EMG.

- Mode 7: Amplitude and frequency modulation of a sine wave with the envelope of the signal from one muscle.

- Mode 8: Myo-Theremin, a single sine wave is simultaneously amplitude modulated by one muscle signal and frequency modulated by a second muscle signal.
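The non-linear amplification of modes 2 and 3 and the envelope of mode 6 can be illustrated with the sketch below. It assumes a signal normalized to the range -1 to 1 and computes the envelope by rectification followed by a moving average; the text does not say how the firmware computes the envelope, so that method and the 50 ms window are assumptions.

```python
def power_law(signal, exponent):
    """Raise each sample to an odd power (3 for mode 2, 5 for mode 3).

    An odd exponent preserves the sign of each sample. For a signal
    normalized to [-1, 1], small samples are attenuated far more than
    large ones, so peaks stand out against the background.
    """
    return [x ** exponent for x in signal]


def envelope(signal, window=500):
    """Rectify and smooth with a moving average (ASSUMED method).

    window=500 samples corresponds to 50 ms at 10 kHz (ASSUMED value).
    """
    history = [0.0] * window   # circular buffer of rectified samples
    running_sum = 0.0
    out = []
    for n, x in enumerate(signal):
        rectified = abs(x)
        running_sum += rectified - history[n % window]
        history[n % window] = rectified
        out.append(running_sum / window)
    return out


if __name__ == "__main__":
    samples = [0.1, -0.5, 0.9, -0.2]
    print(power_law(samples, 3))   # mode 2
    print(power_law(samples, 5))   # mode 3
    print(envelope(samples, window=2))
```

For example, cubing reduces a sample of 0.5 to 0.125 while leaving a full-scale sample of 1.0 unchanged, which is one way to make the larger peaks of the signal more prominent.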

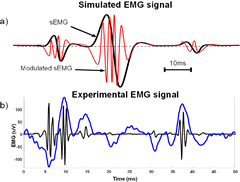

Figure 4 shows an example of signal processing for amplitude and frequency modulation from one muscle (mode 7 of the AMUSE-ME device). The processed sEMG signal resembles and sounds like an iEMG signal (a series of “clicks”, like hail on a metal roof).
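Combined amplitude and frequency modulation can be sketched with a phase-accumulator oscillator, as below. The 200 Hz to 2 kHz frequency range and the linear mapping from envelope to frequency are assumptions chosen for illustration, and the function name is hypothetical.

```python
import math

FS_HZ = 10_000      # sampling frequency (from the text)
F_MIN_HZ = 200.0    # ASSUMPTION: tone frequency when the control is 0
F_MAX_HZ = 2000.0   # ASSUMPTION: tone frequency when the control is 1


def am_fm_tone(amp_env, freq_env, fs_hz=FS_HZ, f_min=F_MIN_HZ, f_max=F_MAX_HZ):
    """Sine wave whose amplitude follows amp_env and whose frequency
    follows freq_env (both expected in the range 0 to 1)."""
    phase = 0.0
    out = []
    for amp, ctrl in zip(amp_env, freq_env):
        ctrl = min(max(ctrl, 0.0), 1.0)             # clip the control value
        freq = f_min + ctrl * (f_max - f_min)       # linear mapping (ASSUMED)
        # Accumulating phase keeps the waveform continuous as freq changes.
        phase = (phase + 2 * math.pi * freq / fs_hz) % (2 * math.pi)
        out.append(amp * math.sin(phase))
    return out


if __name__ == "__main__":
    # Synthetic envelope: a contraction that ramps up over one second.
    env = [n / FS_HZ for n in range(FS_HZ)]
    mode7 = am_fm_tone(env, env)              # one muscle drives both
    steady = [0.8] * FS_HZ
    mode8 = am_fm_tone(steady, env)           # two muscles, one per parameter
    print(len(mode7), len(mode8))
```

In this sketch, mode 7 corresponds to passing the same envelope for both arguments, while the Myo-Theremin of mode 8 corresponds to passing the signals of two different muscles.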

Figure 5 shows the final prototype, assembled and equipped with electrodes, front end, reference strip and cables. In the upper right panel, the device is shown connected to loudspeakers playing the sound of the abductor pollicis brevis.

Possible Applications of the Equipment Designed

The AMUSE-ME device can be used in many different scientific fields, from rehabilitation medicine to neurophysiology, as well as in the entertainment and art fields, where music performance practices based on sonified muscle contractions may be developed.

Aid to Clinical Neurology

A tool able to “hear” sounds similar to the clicks of individual motor units could allow a neurologist to use surface electrodes instead of needles during clinical electromyography. The methods of analysis of electromyographic signals typically carried out by neurologists are based on techniques of “pattern recognition” performed unconsciously by the brain. In fact, the doctor usually listens to the sound obtained by amplifying the electrical signal picked up from the muscle with a needle. The human brain is indeed able to recognize features of a sound simply by associating them with known sounds, like hail on a tin roof, or the sound of a waterfall.

Rehabilitation for Children

A tool that makes the surface EMG or its transformed version audible, even in the form of musical tone (changing with the force exerted by the muscle), could help the process of rehabilitation for children, who may play with the sounds produced by their muscles: the musical aspect of the treatment could help entice them to do a particular exercise.

Construction of New Musical Instruments

The most advanced form of control using the device could be used by musicians to explore the ability to control muscle EMG and use it to modify certain aspects of musical performances in real time. A simple example could be the use of a muscle signal to drive a guitar wah-wah. A myoelectric controlled wah-wah could allow a guitarist to modulate the sound of the guitar while on the move, thereby avoiding the use of a foot pedal. Indeed, in recent decades, the massification of the music market has led to performances of musical groups with hundreds of thousands of spectators, and the stage where these musicians perform is often tens of meters long. In such cases, in the same way as with wireless microphones, exchanging stage-bound effects pedals for devices such as the AMUSE-ME would greatly benefit the musicians’ mobility on stage.

Another application could be a multimodal myo-theremin whose sound changes in frequency, amplitude, distortion, echo, tone, etc. as different muscles are contracted. In this way, a single performer could create any kind of sound using different muscles and use the neuroplasticity of the brain to improve muscle control in a variety of performance contexts.

Example of Application

A video showing a possible application of the AMUSE-ME system has been produced, in which simultaneous recordings of different muscles of the same subject are superimposed and mixed; loops are built using short portions of the mix. The video is a brief representation of only one of the possibilities of music generation using the AMUSE-ME device.

Conclusions

We developed a portable instrument that transforms the EMG signal into an audible sound and offers several modes of operation. The instrument could be applied in clinical fields, for example as auditory feedback of EMG, or in the arts for the generation of alternative electronic music.

Bibliography

Asato, Hideo, Dennis G. Twiggs and Shelley Ellison. “EMG Biofeedback Training for a Mentally Retarded Individual with Cerebral Palsy.” Physical Therapy 61/10 (October 1981), pp. 1447–1451.

Cage, John and Merce Cunningham. Variations V. Performed at Philharmonic Hall in New York City on 23 July 1965.

Dubost, Gilles and Atau Tanaka. “A Wireless, Network-based Biosensor Interface for Music.” ICMC 2002. Proceedings of the International Computer Music Conference (Gothenburg, Sweden, 2002).

Knapp, R. Benjamin and Hugh Lusted. “A Bioelectric Controller for Computer Music Applications.” Computer Music Journal 14/1 (Spring 1990) “New Performance Interfaces (1),” pp. 42–47.

Merletti, Roberto, Alberto Botter, Corrado Cescon, Marco A. Minetto and Taian M.M. Vieira. “Advances in Surface EMG: Recent Progress in Clinical Research Applications.” Critical Reviews in Biomedical Engineering 38/4 (2010), pp. 347–379.

Miranda, Eduardo Reck, Ken Sharman, Kerry Kilborn and Alexander Duncan. “On Harnessing the Electroencephalogram for the Musical Braincap.” Computer Music Journal 27/2 (Summer 2003) “Live Electronics in Recent Music of Luciano Berio,” pp. 80–102.

Morales-Manzanares, Roberto, Eduardo F. Morales, Roger B. Dannenberg and Jonathan Berger. “An Interactive Music Composition System Using Body Movements.” Computer Music Journal 25/2 (Summer 2001) “Performance Interfaces and Dynamic Modeling of Sound,” pp. 25–36.

Parker, Philip A. and Robert N. Scott. “Myoelectric Control of Prosthesis.” Critical Reviews in Biomedical Engineering 13/4 (1986), pp. 283–310.

Reddy, Narender P. and Vineet Gupta. “Toward Direct Biocontrol Using Surface EMG Signals: Control of Finger and Wrist Joint Models.” Medical Engineering & Physics 29/3 (April 2007), pp. 398–403.

Sanger, Terence D. “Use of Surface Electromyography (EMG) in the Diagnosis of Childhood Hypertonia: A Pilot Study.” Journal of Child Neurology 23/6 (June 2008), pp. 644–648.

Tanaka, Atau and R. Benjamin Knapp. “Multimodal Interaction in Music using the Electromyogram and Relative Position Sensing.” NIME 2002. Proceedings of the 2nd International Conference on New Instruments for Musical Expression (Dublin: Media Lab Europe, 24–26 May 2002), pp. 1–6.

Tanaka, Atau. “Musical Performance Practice on Sensor-based Instruments.” Trends in Gestural Control of Music. Edited by Marcelo M. Wanderley and Marc Battier. Paris: IRCAM — Centre Pompidou, 2000, pp. 389–406.

Warner, Dave, Todd Anderson and Jo Johanson. “Bio Cybernetics: A Biologically Responsive Interactive Interface — The Next Paradigm of Human Computer Interaction.” In Proceedings of Medicine Meets Virtual Reality: Interactive Technology & Healthcare — Visionary Applications for Simulation Visualization Robotics. San Diego: Aligned Management Associates, 1994, pp. 237–241.

Waters, Roger. Music from “The Body”. EMI, 1970.
